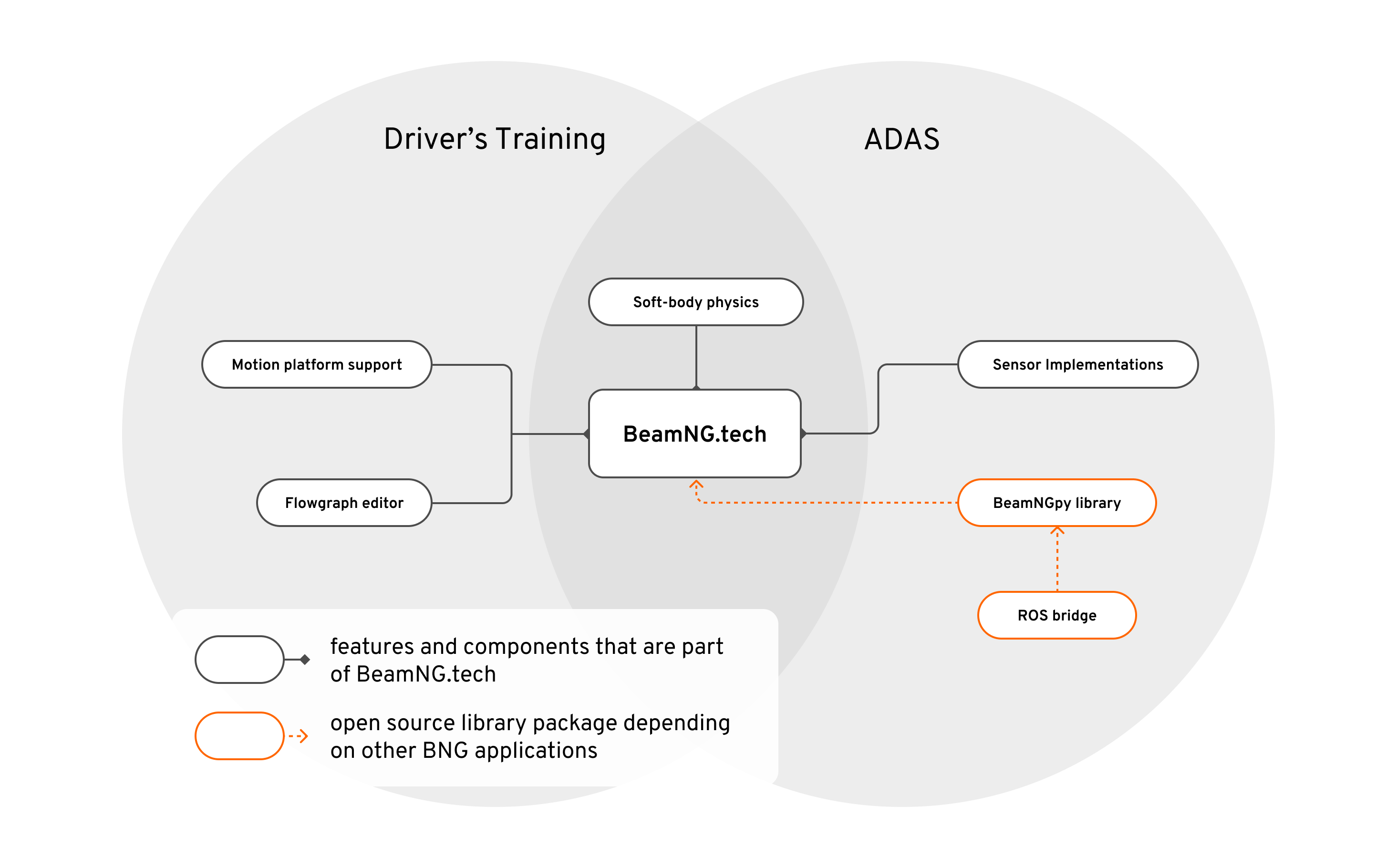

In support of the SOTIF (ISO 21448) approach to validation and verification, we rely on the scenario-based development paradigm. It allows users to collect data and evaluate systems quickly and systematically under well-defined conditions, either programmatically or through our Flowgraph editor, which requires no prior programming experience. To facilitate interoperability with other frameworks, we also provide BeamNGpy, a freely available Python interface.

Whether you select from our wide variety of vehicle models and levels or integrate your own models into the simulation, BeamNG helps you bring your product closer to completion.

Sensors

Camera

We extended the classic camera sensor to capture additional information, so that it extracts as much as possible from the simulation. Besides RGB images, it provides pixel-perfect depth, object-class and object-instance information. Classic camera settings such as field of view and resolution are easy to configure, and the sensor can be attached to any part of the vehicle to match your technology demonstrator.
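
As an illustration of how the depth channel and the field-of-view setting fit together, the following sketch back-projects a depth image into 3D points under a standard pinhole camera model. It is not BeamNG.tech or BeamNGpy code: it assumes a vertical field of view, square pixels, a principal point at the image centre, and depth measured along the optical axis rather than as Euclidean range, so check your sensor configuration before relying on these conventions. The helper names `focal_length_px` and `backproject` are hypothetical.

```python
import math


def focal_length_px(fov_y_deg: float, height_px: int) -> float:
    """Focal length in pixels for a pinhole camera with the given vertical FOV."""
    return (height_px / 2.0) / math.tan(math.radians(fov_y_deg) / 2.0)


def backproject(depth, fov_y_deg):
    """Convert a z-depth image (list of rows, in metres) into camera-frame 3D points.

    Assumptions (illustrative, not BeamNG's documented conventions):
    pinhole model, square pixels, principal point at the image centre,
    depth measured along the optical axis. Camera frame: x right, y down,
    z forward (a common computer-vision convention).
    """
    height = len(depth)
    width = len(depth[0])
    f = focal_length_px(fov_y_deg, height)
    cx = (width - 1) / 2.0   # principal point, pixel centres at integer coordinates
    cy = (height - 1) / 2.0
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # skip invalid or empty pixels
                continue
            x = (u - cx) * z / f
            y = (v - cy) * z / f
            points.append((x, y, z))
    return points
```

With a 90° vertical field of view the focal length equals half the image height in pixels, so in a 3x3 depth image at 2 m the corner pixel maps to a point about 1.33 m off-axis in x and y.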

Lidar

Our Lidar implementation mimics the behavior of real Lidar sensors. As a rotational Lidar, it generates point clouds using ray tracing. Like all of our sensors, it is highly customizable and produces a perfect scan of the environment. Soon it will also provide ground truth alongside the generated point clouds, accelerating your research and product development.
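
The ray-tracing principle behind a rotational Lidar can be sketched with a toy scene that contains only a flat ground plane. Each vertical channel has a fixed elevation angle, the sensor sweeps every channel through a full revolution in equal azimuth steps, and each ray is intersected with the scene; hits within the maximum range become points in the cloud. This is a minimal illustration under those assumptions, not BeamNG's implementation, and the function and parameter names are hypothetical.

```python
import math


def ray_plane_hit(origin, direction, plane_z=0.0):
    """Distance along a unit ray to the horizontal plane z = plane_z, or None."""
    dz = direction[2]
    if abs(dz) < 1e-12:
        return None  # ray parallel to the plane
    t = (plane_z - origin[2]) / dz
    return t if t > 0 else None  # only hits in front of the sensor count


def rotational_scan(sensor_height, elevations_deg, n_azimuth, max_range):
    """Simulate one revolution of a rotational Lidar over a flat ground plane.

    Each channel (elevation angle) is swept through n_azimuth equally spaced
    azimuth steps. Each ray is intersected with the toy scene (only the plane
    z = 0 here) and the hit point is kept if it lies within max_range.
    """
    origin = (0.0, 0.0, sensor_height)
    cloud = []
    for elev in elevations_deg:
        e = math.radians(elev)
        for k in range(n_azimuth):
            a = 2.0 * math.pi * k / n_azimuth
            # Unit direction vector from elevation e and azimuth a.
            d = (math.cos(e) * math.cos(a), math.cos(e) * math.sin(a), math.sin(e))
            t = ray_plane_hit(origin, d)
            if t is not None and t <= max_range:
                cloud.append(tuple(o + t * di for o, di in zip(origin, d)))
    return cloud
```

For a sensor mounted 2 m above the ground, a channel tilted 30° downward hits the plane 4 m along the ray, about 3.46 m away horizontally, while upward-tilted channels produce no returns in this scene. A realistic scene would replace `ray_plane_hit` with intersection tests against the full environment geometry.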

IMU

Our IMU sensor captures the agent's driving dynamics in detail. While the other sensors supply the data for intelligent decision-making, the IMU sensor lets you take the comfort of every vehicle passenger into account. For non-emergency systems, steady driving behavior is essential to successful deployment. Because our simulation produces detailed vehicle dynamics, the IMU sensor provides the means to develop a product with the customer in mind.
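
One common way to turn IMU data into a comfort indicator is to look at jerk, the rate of change of acceleration. The sketch below computes jerk by finite differences from equally spaced acceleration samples and reports its peak and RMS values. It assumes you have already extracted one acceleration axis (for example longitudinal) into a list sampled every `dt` seconds; it does not reflect the actual BeamNGpy output format, the function names are hypothetical, and any acceptance threshold is left to your own requirements.

```python
import math


def jerk(accel, dt):
    """Finite-difference jerk (m/s^3) from equally spaced acceleration samples (m/s^2)."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return [(a1 - a0) / dt for a0, a1 in zip(accel, accel[1:])]


def comfort_stats(accel, dt):
    """Peak and RMS of the jerk signal, two simple ride-comfort indicators."""
    j = jerk(accel, dt)
    if not j:
        return 0.0, 0.0  # fewer than two samples: no jerk can be computed
    peak = max(abs(x) for x in j)
    rms = math.sqrt(sum(x * x for x in j) / len(j))
    return peak, rms
```

Lower peak and RMS jerk generally indicate smoother driving, so comparing these values across scenario runs is one way to track passenger comfort as a driving function evolves.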

Damage Sensor

Unique to BeamNG.tech, the damage sensor provides comprehensive information about the state of the vehicle: it reports not only the condition of the outer body parts but also that of the individual powertrain components. Although it has no real-world counterpart, this sensor is an indispensable tool for assessing the quality of any autonomous driving assistant without putting real-world hardware at risk.
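
To show how damage information can feed into an automated pass/fail check, the sketch below groups damaged parts by category and fails a run if any part exceeds a threshold. The report format (keys such as `body/front_bumper` or `powertrain/gearbox`, values as damage fractions between 0 and 1), the default threshold of 0.1, and the function names are hypothetical choices for illustration; they do not describe the data actually returned by BeamNG.tech or BeamNGpy.

```python
from typing import Dict, List


def damaged_parts(report: Dict[str, float], threshold: float = 0.1) -> Dict[str, List[str]]:
    """Group parts whose damage exceeds `threshold` by category.

    Hypothetical input format: keys are "<category>/<part>" strings,
    values are damage fractions in [0, 1].
    """
    result: Dict[str, List[str]] = {}
    for key, level in sorted(report.items()):
        if level > threshold:
            category, _, part = key.partition("/")
            result.setdefault(category, []).append(part)
    return result


def run_passed(report: Dict[str, float], threshold: float = 0.1) -> bool:
    """A test run passes if no body or powertrain part exceeds the threshold."""
    return not damaged_parts(report, threshold)
```

Separating body and powertrain damage makes it easy to tell a cosmetic scrape from a run that would have disabled the vehicle.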